One of Wwise’s few shortcomings is its current lack of support for LFOs. Modulation can be a godsend for making static looping sounds feel far more dynamic and alive (an example using volume, pitch, and LPF is here). I want to outline two ways you can “cheat” modulation in Wwise using some technical trickery.

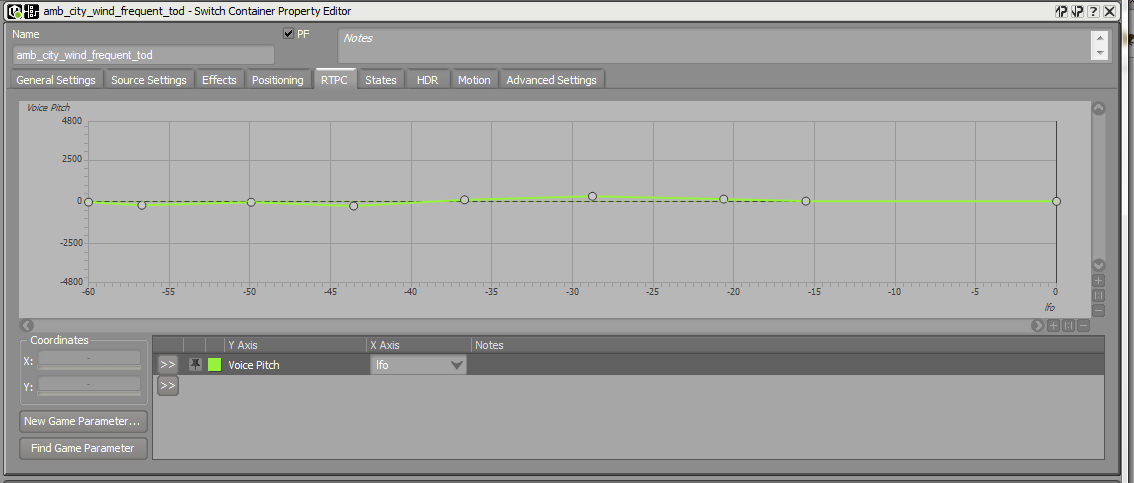

1). Modulation RTPC:

This is the simpler method, although somewhat limited in its dynamism. Simply create an RTPC linked to a global timer; once the timer reaches its max, it resets itself to 0. I’ve called mine “modulation”, with a min of 0 and a max of 100 (the units are seconds, set in the engine). I can then draw an RTPC curve for modulation on any sound I want and affect the pitch, volume, LPF, etc. over time (friendly reminder: subtle pitch changes are WAY more appealing than extreme pitch changes). The most important thing to remember is to make the curve’s values at 0 and 100 identical, so there’s no pop when the loop wraps. The obvious drawback to this solution is that the modulation is uniform, with no possibility of change per cycle. However, with a 100-second loop, you have a fair bit of time to build a dynamic modulation curve whose looping won’t be easily detected by a user.
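To make the timer side concrete, here’s a minimal, engine-agnostic sketch in Python of the wrapping logic. It’s an illustration, not actual project code: `ModulationTimer`, `curve_is_seamless`, and the `set_rtpc` callback are names I’ve made up for this example, and the callback stands in for whatever your engine’s Wwise integration uses to push a game parameter (in the C++ SDK that would be a call to `AK::SoundEngine::SetRTPCValue`).

```python
import math

MODULATION_MAX = 100.0  # seconds; matches the 0-100 RTPC range described above


class ModulationTimer:
    """Global timer that wraps back toward 0 once it reaches its max."""

    def __init__(self, max_seconds=MODULATION_MAX, set_rtpc=None):
        self.max_seconds = max_seconds
        self.value = 0.0
        # Hypothetical callback: stands in for the engine-side call that
        # sends the value to Wwise. Signature assumed: (rtpc_name, value).
        self.set_rtpc = set_rtpc

    def update(self, delta_seconds):
        # Modulo keeps any time left over past the max instead of dropping it,
        # so the cycle length stays exactly max_seconds.
        self.value = (self.value + delta_seconds) % self.max_seconds
        if self.set_rtpc is not None:
            self.set_rtpc("modulation", self.value)
        return self.value


def curve_is_seamless(points, tolerance=1e-6):
    """Check that a curve's first and last y-values match (no pop at the wrap).

    points is a list of (x, y) pairs sorted by x, e.g. an exported RTPC curve.
    """
    return math.isclose(points[0][1], points[-1][1], abs_tol=tolerance)
```

You’d call `update()` once per frame with the frame’s delta time. The `curve_is_seamless` helper is just a sanity check you could run on a list of curve points to catch the mismatched-endpoints mistake before it turns into a pop.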

2). Using a tremolo effect as an LFO:

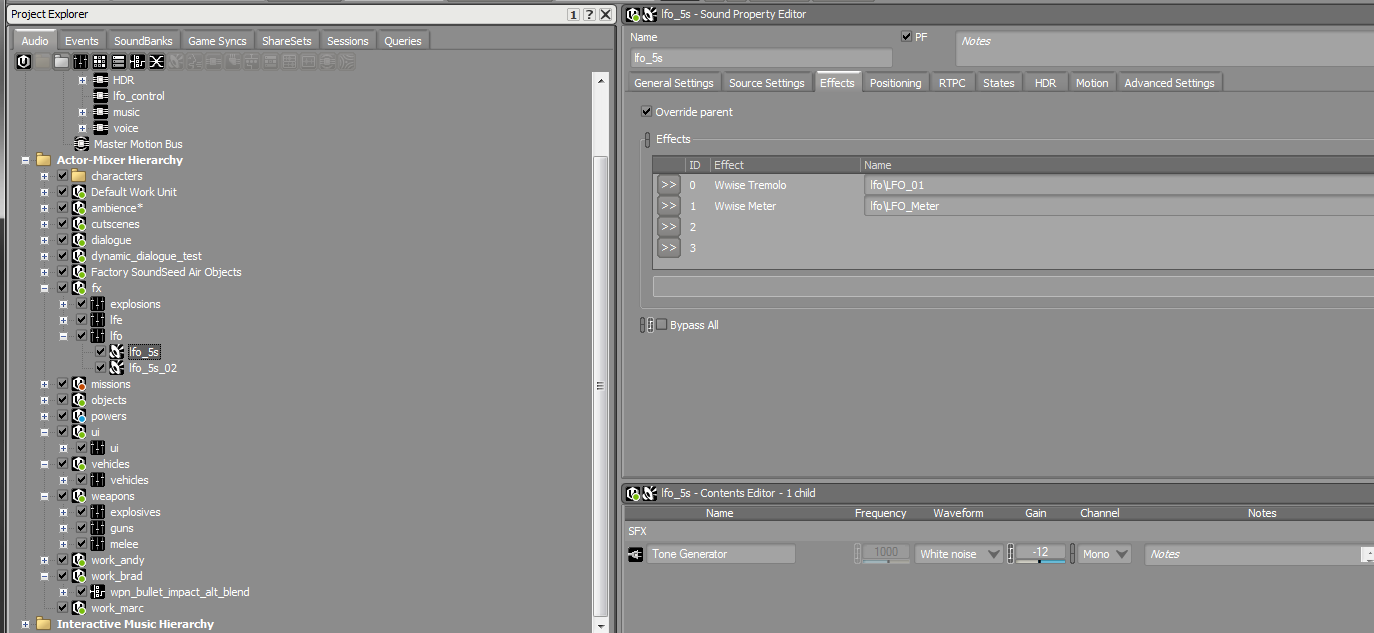

This solution comes from Steven Grimley-Taylor, who posted about it on the Wwise forums, and it is nothing short of a brilliant use of the tools available in Wwise to make an LFO a reality. It also has some limitations, which we’ll discuss in a bit. The gist of the concept is to create a white or pink noise sound generator, run it through a tremolo effect, and meter the result into an RTPC. As Steven explains it:

“Create a Sound SFX object with a Tone Generator Source set to White Noise. Then add a tremolo plugin and then a metering plugin which generates an RTPC

The tremolo becomes your LFO control and you can map it anywhere you want. It becomes unstable at faster speeds, but then this is probably not the best solution for Audiorate FM. For normal ‘modulation’ speed LFOs it works a treat.

You can also go into modular synth territory by creating another of these LFO’s and then modulating the frequency of the first LFO with the amplitude of the second.

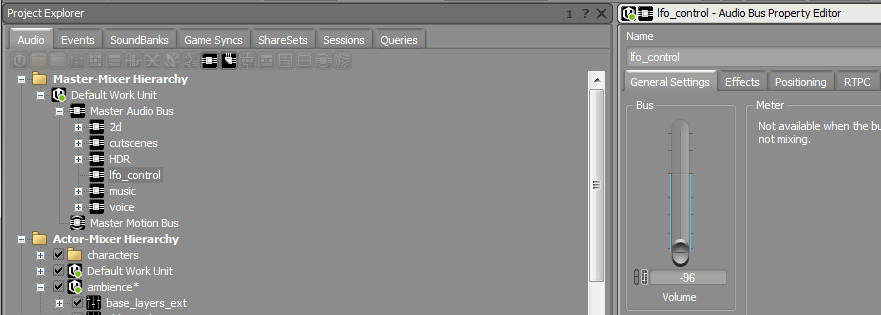

Oh the LFO audio should be routed to a muted bus, you don’t actually want to hear them, just generate a control RTPC”
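If it’s not obvious why this works, here’s a small offline simulation in Python of the signal chain: noise, then tremolo, then a meter. This is a conceptual sketch only, not Wwise code. The function name, the sine-shaped tremolo, and the block-based RMS meter are all my own assumptions for illustration; the real tremolo and meter plugins have their own behaviour, and the meter reports in dB rather than linear level.

```python
import math
import random


def tremolo_lfo_levels(rate_hz, depth, duration_s,
                       sample_rate=8000, block_size=256, seed=0):
    """Simulate white noise -> tremolo -> meter and return one level per block.

    The returned list is the 'control signal' you would map to an RTPC.
    """
    rng = random.Random(seed)  # seeded so the sketch is repeatable
    total_samples = int(duration_s * sample_rate)
    levels = []
    for start in range(0, total_samples - block_size + 1, block_size):
        energy = 0.0
        for n in range(start, start + block_size):
            noise = rng.uniform(-1.0, 1.0)  # white noise source
            # Sine LFO scaled to 0..1
            lfo = 0.5 * (1.0 + math.sin(2.0 * math.pi * rate_hz * n / sample_rate))
            # Tremolo gain swings between (1 - depth) and 1
            gain = 1.0 - depth * (1.0 - lfo)
            energy += (noise * gain) ** 2
        # RMS of the block: a crude stand-in for the metering plugin
        levels.append(math.sqrt(energy / block_size))
    return levels


# One cycle of a 1 Hz LFO at full depth: the metered level rises and falls
# with the tremolo, even though the source itself is just noise.
levels = tremolo_lfo_levels(rate_hz=1.0, depth=1.0, duration_s=1.0)
```

Because the noise has a roughly constant level, the only thing the meter ends up “seeing” is the tremolo’s gain curve, and that is what comes out as your LFO. The block size also hints at why it gets unstable at faster speeds: once the LFO period approaches the meter’s window, the meter can no longer track it cleanly.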

I’m currently using a couple of these in my project and they work great. The only drawback is that the tone generator plus the tremolo isn’t super cheap (~2–3% of CPU per LFO), so the more modulators you add, the more expensive it gets. But you can drive the parameters of the LFO from other RTPCs, opening up enormous avenues of creativity and evolving sounds. It’s a really nice way to spice up some bland looping sounds and give them a bit more life.